The holiday season is almost upon us, and the biggest players in the tablet market finally have their latest flagships available. The iPad Air, from Apple, and the Galaxy Note 10.1 2014 Edition, from Samsung, are two of the most interesting tablet flagships this holiday season. Both have very high-end specs, including high-resolution displays and very powerful processors, along with a (perhaps too) high price tag. But which one is most worth your money?

| | Apple iPad Air | Samsung Galaxy Note 10.1 (2014) |
| --- | --- | --- |
| Body | 240 x 169.5 x 7.5mm, 469g (Wi-Fi)/478g (LTE) | 243 x 171 x 7.9mm, 540g (Wi-Fi)/547g (LTE) |
| Display | 9.7" IPS LCD 2048 x 1536 (264ppi) | 10.1" TFT LCD 2560 x 1600 (299ppi) |
| Storage | 16/32/64 GB, 1 GB RAM | 16/32 GB, 3 GB RAM |
| Connectivity | Wi-Fi, GSM (2G), HSDPA (3G), LTE (4G) | Wi-Fi, GSM (2G), HSDPA (3G), LTE (4G) |
| Camera (Rear) | 5 MP with 1080p@30fps video, F/2.4 aperture, HDR, face detection | 8 MP with LED flash, face detection and 1080p@60fps video |
| Camera (Front) | 1.2 MP with 720p@30fps video and face detection | 2 MP with 1080p@30fps video |
| OS | iOS 7 | Android 4.3 Jelly Bean |
| Processor | Apple A7 (Dual-core Cyclone @ 1.4GHz + PowerVR G6430 @ 450MHz) | Wi-Fi: Exynos 5420 (Quad-core Cortex-A15 @ 1.9GHz + Quad-core Cortex-A7 @ 1.3GHz + Mali-T628); LTE: Qualcomm Snapdragon 800 MSM8974 (Quad-core Krait 400 @ 2.3GHz + Adreno 330 @ 450MHz) |
| Battery | Non-removable Li-Po 8,820 mAh; video playback time: 10hrs | Non-removable Li-Po 8,220 mAh; video playback time: 10hrs |
| Starting Price | $499 (16GB, Wi-Fi) | $549 (16GB, Wi-Fi) |
| Accessories | -- | S Pen |

Design

Both Samsung and Apple have produced good designs for their flagship tablets, but it'll come down to the usual plastic vs aluminium debate, or in this case faux leather vs aluminium. While the iPad Air maintains its all-aluminium design, this time inspired by the iPad mini rather than the previous iPad, Samsung has done the same as it did with the Note III, replacing glossy plastic with a back casing that is still plastic, but is now disguised as leather. I'm not sure I appreciate the faux leather design at all. Personally, not only do I prefer the iPad's aluminium construction, but I think the faux leather looks so old-fashioned that even the glossy plastic they used previously may look better. That's just my opinion though, and ultimately it'll come down to personal taste. At least the faux leather gives the Galaxy Note 10.1 more grip than the iPad Air. The Galaxy Note 10.1 is available in black and white (bezel color included), and the iPad Air is similarly available in "Space" gray and silver.

Both the Galaxy Note 10.1 and the iPad Air are remarkably thin and light. They are in fact among the thinnest and lightest tablets available, but the iPad Air is definitely the winner in this department. It's technically thinner than the Note 10.1 (7.5mm vs 7.9mm), but the difference is so small it's practically unnoticeable to the user. While their thickness is on the same level, the iPad Air is significantly lighter than the Note 10.1 (469g vs 540g for the Wi-Fi models). In this case the difference in weight is definitely noticeable. The Note 10.1 is still lighter than most other tablets, though.

Display

The display is possibly the area where these two tablets fare the best. Both are large, crisp, bright, and colorful. The iPad Air, much like two of its predecessors, has a 9.7" display with a 4:3 aspect ratio and a resolution of 2048 x 1536, which gives the screen 264ppi pixel density. The Note 10.1 has, like the name implies, a 10.1" display with a 16:10 aspect ratio that packs 2560 x 1600 pixels and has a pixel density of 299ppi.
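For readers who want to check the pixel density figures, here is a minimal sketch of the usual calculation: the diagonal length in pixels divided by the diagonal size in inches. The function name `pixel_density` is just an illustrative choice, and the inputs are the resolutions and screen sizes quoted above.

```python
import math


def pixel_density(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Return pixels per inch for a given resolution and diagonal size in inches."""
    # The diagonal in pixels follows from the Pythagorean theorem.
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


ipad_air_ppi = pixel_density(2048, 1536, 9.7)
note_ppi = pixel_density(2560, 1600, 10.1)

# Rounded to the nearest whole number, these match the 264ppi and 299ppi figures.
print(round(ipad_air_ppi), round(note_ppi))
```

By this calculation the Note 10.1's density is roughly 13% higher than the iPad Air's.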

Perhaps the most fundamental difference between the displays is the aspect ratio. The almost-square 4:3 display in the iPad Air makes it better to use in portrait mode, and is more suited for reading and web browsing, while the wide 16:10 display in the Note 10.1 makes it better suited for usage in landscape mode, and frankly makes portrait mode use a bit awkward, but is generally better for watching videos.

You may think that the difference between 264ppi and 299ppi is huge, but honestly, it's hard to notice the Galaxy Note 10.1 being any crisper than the iPad Air, especially at the usual viewing distance. The difference is there, however, and any eagle-eyed person would probably notice a slight difference in sharpness.

Leaving the numbers and quantitative data aside, both the Note 10.1's and the iPad Air's displays are sufficiently bright. Viewing angles are good, as is expected of any half-decent tablet these days, and colors are accurate and satisfyingly saturated in both tablets.

Performance

Both of these tablets have some of the most powerful processors available to handle their ultra high-resolution duties. On the iPad Air we have the same A7 SoC found in the iPhone 5s and the Retina iPad mini, and on the Galaxy Note 10.1 we have either the Snapdragon 800 or a rare Exynos 5 Octa (5420) SoC for the LTE and Wi-Fi models, respectively. All of these SoCs are built on a 28nm process node to keep power consumption lower.

The A7's CPU technology has gained quite a bit of popularity since its launch back in September. That's because it's the first CPU to utilize the ARMv8 ISA, which happens to be a 64-bit architecture, hence also making it the first 64-bit mobile SoC. Apart from the new ISA, Apple made its new Cyclone CPU core the widest mobile CPU ever seen. With all that power packed into a single core, Apple needed no more than two of those cores with a relatively low 1.4GHz clock speed to match its competitors' performance. As benchmarks show, the dual-core Cyclone CPU @ 1.4GHz is perfectly capable of competing with the latest quad-core beasts, and since it packs much more power into a single core, the A7 really stands out from its competitors in single-threaded CPU benchmarks.

The CPU in the Exynos 5420 SoC in the Wi-Fi Galaxy Note 10.1 is one of the few CPUs to utilize ARM's big.LITTLE technology. Based on the ARMv7 32-bit ISA, the Exynos 5420 contains two CPU clusters: a high-performance cluster to handle demanding tasks, and a low-power cluster to handle lighter tasks while reducing power consumption. The high-performance cluster contains four Cortex-A15 cores clocked at 1.9GHz, while the low-power cluster has four Cortex-A7 cores @ 1.3GHz.

The Qualcomm Snapdragon 800 variant of the Galaxy Note 10.1 (the LTE version) uses, like Apple's A7, a custom CPU core. Qualcomm's is dubbed Krait 400 and is based on the ARMv7 32-bit ISA. The Snapdragon 800 has four Krait 400 cores with an insane 2.3GHz clock speed.

As I said before, since the A7's Cyclone CPU has a 64-bit architecture and is wider than all of its competitors, it manages a much higher score in single-threaded CPU benchmarks. In multi-threaded applications, however, the A7 is at a disadvantage because it has fewer cores than its competitors, although it can still keep up with its quad-core competition. The multi-threaded test puts the A7 very close to the Exynos 5420, but both processors lag behind the Snapdragon 800.

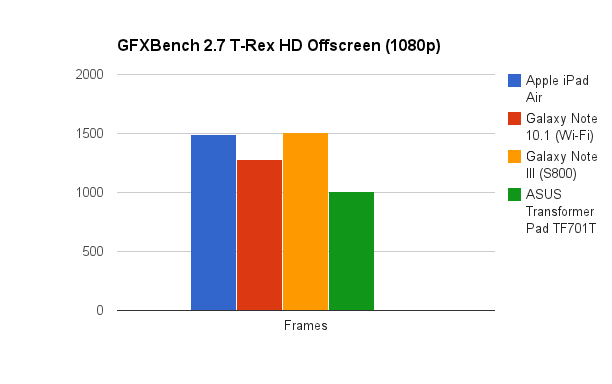

With the CPU out of the way, let's focus on the GPU of the Galaxy Note 10.1 and the iPad Air. The A7 SoC follows Apple's tradition of licensing GPUs only from ImgTech, and so we have a PowerVR G6430 graphics processor in the A7. On the Wi-Fi Note 10.1's Exynos 5420 processor there's an ARM Mali-T628 GPU, and the LTE Note 10.1 has an Adreno 330 GPU. All of these GPUs are among the most powerful mobile GPUs available, so we turn to GFXBench to tell us which of these GPUs is the most powerful.

Note: Unfortunately there are no benchmark scores yet available for the Snapdragon 800-based Note 10.1, so I'm taking data from the closest match I could find, the Galaxy Note III with Snapdragon 800. However, I'll omit the Note III scores from the Onscreen tests due to the difference in resolution between the Note III and the Note 10.1.

The T-Rex HD Offscreen test shows the PowerVR G6430 in the iPad Air remarkably close to the Adreno 330. The Mali-T628 GPU in the Wi-Fi Note 10.1 lags behind them both, but at least outperforms the NVIDIA Tegra 4 SoC in the ASUS Transformer Pad.

The lighter Egypt HD Offscreen test shows the iPad Air's GPU falling behind both the Wi-Fi Galaxy Note 10.1 and the Snapdragon 800-powered Galaxy Note III, and puts the Snapdragon 800 at the top of the chart.

Note that since these two tests are rendered at a fixed, non-native resolution, the difference in resolution between the Note 10.1 and the iPad Air doesn't affect the scores here.

The Onscreen tests illustrate how the roughly 1 million extra pixels that these two Android flagships have to push compared to the iPad Air bog down their performance. The T-Rex HD test shows that the iPad Air managed a much higher score than the Exynos-based Galaxy Note 10.1 and the Tegra 4 ASUS Transformer Pad TF701T.
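To put a number on that extra workload, here is a quick sketch that counts the pixels at each native resolution. The helper name `pixel_count` is just for illustration, and the resolutions are the ones from the spec table.

```python
def pixel_count(width_px: int, height_px: int) -> int:
    """Return the total number of pixels at a given resolution."""
    return width_px * height_px


ipad_air_pixels = pixel_count(2048, 1536)   # 3,145,728 pixels
android_pixels = pixel_count(2560, 1600)    # 4,096,000 pixels

extra_pixels = android_pixels - ipad_air_pixels
extra_ratio = android_pixels / ipad_air_pixels

# 950,272 extra pixels, which is roughly 30% more work per frame.
print(f"{extra_pixels:,} extra pixels ({extra_ratio - 1:.0%} more)")
```

This only counts pixels per frame; actual frame rates also depend on the GPU, drivers and the test itself.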

Since the Egypt HD test is much lighter than T-Rex HD, the margin between the iPad Air and its competitors becomes narrower. However, it's still clear that the iPad Air, due to its significantly lower resolution, can push more frames than its 1600p Android competitors.

Until the Snapdragon 800-based LTE Galaxy Note 10.1 gets released there's no data to indicate how it compares to the iPad Air in the Onscreen tests, although if I were to guess, I'd say that, even though the Adreno 330 is slightly more powerful than the PowerVR G6430 in the Apple A7, its performance advantage still won't be able to offset the resolution difference between it and the iPad Air.

Usually, the Onscreen tests would most accurately mimic real-world gaming performance, given that Android and iOS games tend to run at the device's native screen resolution. Since the iPad 3, however, developers have been going another way: for specific ultra high-res devices, the game runs at a lower-than-native resolution and is then upscaled to the device's screen resolution to avoid performance issues. For example, a developer might program a game to render at 1920 x 1200 and then scale to 2560 x 1600 on the Galaxy Note 10.1 to keep framerates high. Given how the Galaxy Note 10.1's higher resolution puts it behind its iPad competitor in the Onscreen tests, this sort of optimization might be necessary to keep a decent framerate in very demanding 3D games.
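As a rough illustration of why this trick helps, the sketch below works out how many pixels the GPU actually renders in the 1920 x 1200 example compared to the Note 10.1's native resolution. The function name `render_fraction` is hypothetical, and the calculation ignores the (usually small) cost of the upscaling step itself.

```python
def render_fraction(render_res: tuple[int, int], native_res: tuple[int, int]) -> float:
    """Return the share of native pixels that are actually rendered per frame."""
    render_pixels = render_res[0] * render_res[1]
    native_pixels = native_res[0] * native_res[1]
    return render_pixels / native_pixels


render_res = (1920, 1200)   # example internal rendering resolution
native_res = (2560, 1600)   # Galaxy Note 10.1 native resolution

fraction = render_fraction(render_res, native_res)
# Both axes are stretched by the same factor, so the image is not distorted.
scale = native_res[0] / render_res[0]

print(f"Rendered pixels: {fraction:.2%} of native, upscaled {scale:.2f}x per axis")
```

In this example the GPU renders a little over half of the native pixel count, which is fewer pixels per frame than the iPad Air's 2048 x 1536 display requires.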

Conclusion

The Galaxy Note 10.1 and the iPad Air are in fact similar in many ways. They both have thin, light designs (although the plastic vs metal war continues with these flagships), displays with a very high resolution, large batteries and some of the best-performing SoCs available.

In hardware terms, the iPad Air and the Galaxy Note 10.1 2014 Edition are indeed very similar, so it'll probably come down to software to determine which one is best for you. With the Note 10.1, we have Android 4.3 (and soon enough 4.4) with Samsung's TouchWiz UI added on top, and the iPad Air obviously runs iOS 7.

The addition of the S Pen digitizer might make you choose the Note 10.1 over the iPad Air, but that'll be only if you really value the advantages that a stylus brings.

Selling for the usual $499 for the 16GB Wi-Fi version, the iPad Air is an expensive tablet, although not as expensive as the Galaxy Note 10.1, which sells for $549 for the 16GB Wi-Fi version. $549 is asking for a lot, so unless the S Pen is really useful for you or you really prefer the Android ecosystem, the iPad Air offers more bang for your buck than the Galaxy Note 10.1 2014 Edition.
